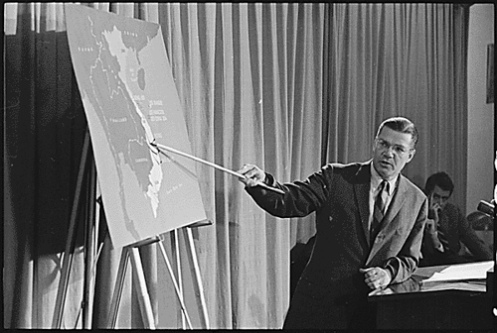

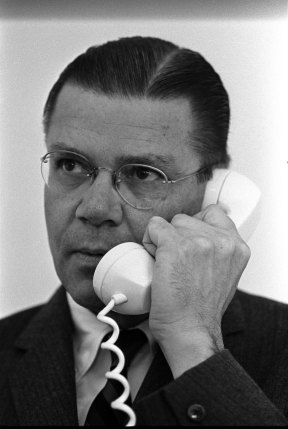

Robert McNamara was, by any standards, a wildly successful man. A Harvard graduate and president of Ford Motors who rose to become U.S. Secretary of Defense in the 1960s, McNamara epitomised American élan and brio. But he had one major flaw – he saw the world in numbers.

During the Vietnam War, McNamara employed a strategic method he had used successfully during his days at Ford, where he created data points for every element of production and ruthlessly quantified everything to improve efficiency. One of the main metrics he used to evaluate progress and inform strategy was body counts. “Things you can count, you ought to count,” claimed McNamara, “loss of life is one.”

The problem with this method was that the Vietnam War was characterised by the unmeasurable chaos of human conflict, not the definable production of parts on a factory assembly line. Things spun out of control as McNamara’s statistical method failed to take into account numerous unseen variables, and the public turned against US involvement in the war through a cultural outcry that would change the country. Although on paper America was ‘winning’ the war, it ultimately lost it.

As the war became more and more untenable, McNamara increasingly had to justify his methods. Far from providing objective clarity, his algorithmic approach gave a misleading picture of what was becoming an unfathomably complex situation. In a 1967 speech he said:

“It is true enough that not every conceivable complex human situation can be fully reduced to the lines on a graph, or to percentage points on a chart, or to figures on a balance sheet, but all reality can be reasoned about. And not to quantify what can be quantified is only to be content with something less than the full range of reason.”

While there is some merit to this approach in certain situations, there is a deeply hubristic arrogance in the reduction of complex human processes to statistics, an aberration which led the sociologist Daniel Yankelovich to coin the term the “McNamara fallacy”:

1. Measure whatever can be easily measured.

2. Disregard that which cannot be measured easily.

3. Presume that which cannot be measured easily is not important.

4. Presume that which cannot be measured easily does not exist.

Sadly, some of these tenets will be recognisable to many of us in education – certainly the first two are consistent with many aspects of standardised testing, inspections and graded lesson observations. This fiscal approach has been allowed to embed itself in education, often with the justification that we should ‘use data to drive up standards.’ What we should be doing is using “standards to drive up data”, as Keven Bartle reminds us.

The fallacy is based on the misguided notion that you can improve something by consistently measuring it. In the classroom, this is best illustrated by the conflation of learning and performance, which, for Robert Bjork, are two very different things – the former is almost impossible to measure, the latter much simpler. It is very easy to transpose observable performance onto a spreadsheet, and so that has become the metric used to measure pupil achievement and, concomitantly, teacher performance. In tandem with that, we have had the hugely problematic grading of lesson observations on a linear scale against often erroneous criteria, as Greg Ashman has written about.

Two years after the Vietnam War ended, Douglas Kinnard published a significant study called The War Managers, in which almost every US general interviewed said that the metric of body counts was a totally misguided way of measuring progress. One noted that they were “grossly exaggerated by many units primarily because of the incredible interest shown by people like McNamara.”

In education, the ‘incredible interest’ of the few over the many is having a disastrous impact in many areas. One inevitable endpoint of a system that audits itself in terms of numbers and then makes high-stakes decisions based on that narrow measurement is the wilful manipulation of those numbers. A culture that sees pupils as numbers and reduces the complex relational process of teaching to data points on a spreadsheet will ultimately become untethered from the moral and ethical principles that are at the heart of the profession, as the recent Atlanta cheating scandal suggests.

Even in the field of education research, there is a dangerous view in some quarters that the only game in town is the randomised controlled trial (its inherent problems have been flagged up by people like Dylan Wiliam). If the only ‘evidence’ in evidence-based practice is that which can be measured through this dollars-and-cents approach, then we are again risking the kind of blind spots associated with the McNamara fallacy.

Teaching is often an unfathomable enterprise that is relational in essence and resists the crude measures often imposed upon it. There should be more emphasis on phronesis, or discretionary practitioner judgement, informed by deep subject knowledge, a set of ethical and philosophical principles, and quality research and sustained inquiry into complex problems.

In my experience, the most important factors in great teaching are almost unmeasurable in numbers. The best teachers I know have a set of common characteristics:

1. They are not only very knowledgeable about their subject but also almost unreasonably passionate about it – something which is infectious for kids.

2. They create healthy relationships with their students in a million subtle ways, which are not only unmeasurable but often invisible to those involved.

3. They view teaching as an emancipatory enterprise which informs/guides everything they do. They see it as the most important job in the world and feel it’s a privilege to stand in a room with kids talking about their passion.

Are these things measurable in numbers, and is it even appropriate to try to measure them? Are these things helped or hindered by the current league table culture?

Robert McNamara died an old man and had the opportunity to reflect on his long life, most notably in the Academy Award-winning documentary The Fog of War. His obituary in The Economist records that:

“He was haunted by the thought that amid all the objective-setting and evaluating, the careful counting and the cost-benefit analysis, stood ordinary human beings. They behaved unpredictably.”

Measuring progress is important. We need to know whether what we are doing is having an impact, compared with another approach that might yield better outcomes, but the current fetish for crude numerical quantification in education is misleading and fundamentally inappropriate for the unpredictable nature of the classroom. We need better ways of recording the phenomena of the classroom, ways that capture more than test scores and arbitrary judgements on teachers, rather than seeking to impose an order where often there is none.
